800 million weekly active users. That’s not a typo. OpenAI just dropped the Apps SDK, and it’s basically the App Store moment for AI. Except instead of convincing people to download your app, you’re already inside the most-used chat interface on the planet.

Let me show you how this actually works.

MCP + UI = Apps SDK (Finally)

The Apps SDK is built on Model Context Protocol (MCP), the open standard for connecting LLMs to tools. But here’s the game-changer: they added visual components.

Apps in ChatGPT fit naturally into conversation. You can discover them when ChatGPT suggests one at the right time, or by calling them by name. The magic is how they blend familiar interactive elements (like maps, playlists and presentations) with new ways of interacting through conversation.

Here’s the flow when someone uses your app:

- User: “Show me Spotify playlists”

- The LLM calls your MCP tool

- Your tool’s _meta points ChatGPT to a UI template that loads your React component, and the tool result’s structuredContent supplies the data

- ChatGPT renders your widget in an iframe

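```typescript
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { z } from "zod";

// Server name and version are placeholders
const server = new McpServer({ name: "spotify-playlist-app", version: "1.0.0" });

server.registerTool(
  "show_spotify_playlist",
  {
    title: "Show Spotify Playlist",
    _meta: {
      // Tells ChatGPT which UI resource renders this tool's results
      "openai/outputTemplate": "ui://widget/spotify-playlist.html",
    },
    // Spotify playlist IDs are strings, not numbers
    inputSchema: { playlistId: z.string() },
  },
  async ({ playlistId }) => {
    return {
      content: [{ type: "text", text: "Playlist loaded!" }],
      structuredContent: { playlistId }, // Hydrates your widget
    };
  }
);
```

Project Structure: Keep It Clean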

```text
app/
├── server/
│   └── mcp-server.ts    # MCP server
└── web/
    ├── src/
    │   └── component.tsx    # React widget
    └── dist/
        └── component.js     # Compiled bundle
```

Pro tip: Use any frontend framework. React, Svelte, Vue: it doesn’t matter. Just compile to JS.

The Secret Sauce: window.openai API

Your widget gets access to window.openai, which is basically steroids for chat UIs.
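Here’s a minimal sketch of the read side. It assumes only the parts of window.openai this post uses: toolOutput (your tool’s structuredContent), widgetState (null until the widget saves something) and setWidgetState. The OpenAiSubset type and both helper names are hypothetical. The API object is passed in as a parameter so the logic can run outside ChatGPT. Since setWidgetState stores a whole new snapshot, the helper merges a patch into the current state instead of overwriting it.

```typescript
// Hypothetical type: only the parts of window.openai used in this post
type WidgetState = Record<string, unknown>;

interface OpenAiSubset {
  toolOutput: { playlistId?: string } | null; // the tool's structuredContent
  widgetState: WidgetState | null; // null until something has been saved
  setWidgetState: (state: WidgetState) => void | Promise<void>;
}

// Read the playlist ID the server put in structuredContent, if present
function getPlaylistId(api: OpenAiSubset): string | undefined {
  return api.toolOutput?.playlistId;
}

// Merge a patch into the saved state instead of clobbering other fields
async function patchWidgetState(
  api: OpenAiSubset,
  patch: WidgetState,
): Promise<WidgetState> {
  const next = { ...(api.widgetState ?? {}), ...patch };
  await api.setWidgetState(next);
  return next;
}
```

In the widget, you’d call getPlaylistId(window.openai) on render. After an interaction, you’d call patchWidgetState(window.openai, { sortBy: "rating" }).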

State That Actually Persists

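```typescript
// Persist UI state; ChatGPT scopes it to this widget instance (its message)
window.openai.setWidgetState({
  selectedTab: 'favorites',
  sortBy: 'rating',
});

// Retrieve it later; widgetState is null until something has been saved
const { selectedTab } = window.openai.widgetState ?? {};
```

Your Widget Can Talk Back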

```typescript
// Call other tools (the target tool must set "openai/widgetAccessible": true in its _meta)
await window.openai.callTool("order_pizza", {
  order: "pepperoni",
  qty: 2,
});

// Guide the conversation
await window.openai.sendFollowupMessage({
  prompt: "Draft a tasting itinerary for my favorite pizzerias",
});
```

The Hidden Data Channel Hack

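Want to pass data to your widget without burning the LLM’s context window? Use _meta:

```typescript
return {
  content: [{ type: "text", text: "Processing..." }],
  structuredContent: { visibleData: "LLM sees this" },
  _meta: {
    // Delivered to the widget only; the model never sees it
    widgetOnlyData: "LLM never sees this",
    displayConfig: "Widget-only data",
  },
};
```

The LLM gets conversational context. Your widget gets everything. Win-win. One catch: _meta is hidden from the model, not from the user. It is delivered to the widget running in the browser, so keep real secrets like API keys on your server.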
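Here’s a sketch of that split for the playlist tool. The Track shape, the track data and the buildPlaylistResult helper are all hypothetical. The model gets a short summary in content and structuredContent. The full track list goes in _meta, where the widget can read it via window.openai.toolResponseMetadata. Nothing in it is secret, because _meta still reaches the browser.

```typescript
// Hypothetical track shape for illustration
interface Track {
  title: string;
  artist: string;
  durationMs: number;
}

// Shape of a tool result as used in this post
interface ToolResult {
  content: { type: "text"; text: string }[];
  structuredContent: Record<string, unknown>; // model and widget see this
  _meta: Record<string, unknown>; // only the widget sees this
}

function buildPlaylistResult(playlistId: string, tracks: Track[]): ToolResult {
  const totalMinutes = Math.round(
    tracks.reduce((sum, t) => sum + t.durationMs, 0) / 60000,
  );
  return {
    content: [
      { type: "text", text: `Loaded ${tracks.length} tracks (${totalMinutes} min).` },
    ],
    // Small summary: enough for the model to talk about the playlist
    structuredContent: { playlistId, trackCount: tracks.length, totalMinutes },
    // Full list for rendering only, which keeps the model's context lean
    _meta: { tracks },
  };
}
```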

The MIME Type That Makes It Work

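```typescript
import { readFileSync } from "node:fs";

// Inline the compiled bundle: a relative <script src> would resolve against
// ChatGPT's sandboxed iframe, not your server
const PLAYLIST_JS = readFileSync("web/dist/component.js", "utf8");

server.registerResource(
  "spotify-playlist-widget",
  "ui://widget/spotify-playlist.html",
  {},
  async () => ({
    contents: [{
      uri: "ui://widget/spotify-playlist.html",
      mimeType: "text/html+skybridge", // This is the magic
      // "root" is an assumed mount point; match whatever your component renders into
      text: `<div id="root"></div><script type="module">${PLAYLIST_JS}</script>`,
    }],
  })
);
```

text/html+skybridge tells ChatGPT “yo, this is renderable UI, not just data.”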
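If you inline the bundle into that text field, watch for one gotcha. When the compiled JS contains a closing script tag as text (say, inside a string), the browser ends the script element early. Here’s a minimal sketch of a template builder that escapes the slash with a backslash. It assumes a hypothetical buildWidgetHtml helper and a root mount element. The escaped form reads the same inside the JS string and regex literals where that sequence normally shows up.

```typescript
// Hypothetical helper: builds the HTML for a text/html+skybridge resource
function buildWidgetHtml(bundleJs: string): string {
  // A literal "</script" would close the element early; "<\/script" means the
  // same thing inside JS string and regex literals
  const safeJs = bundleJs.replace(/<\/script/gi, "<\\/script");
  // "root" is an assumed mount point for the component
  return `<div id="root"></div>\n<script type="module">${safeJs}</script>`;
}
```

Inside the registerResource callback, text would become buildWidgetHtml(readFileSync("web/dist/component.js", "utf8")).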

Development Reality Check

Official setup requires:

- ngrok tunneling

- ChatGPT developer mode

- Manual testing

Better option: Use MCPJam inspector for local dev:

npx -y @mcpjam/inspector@latest

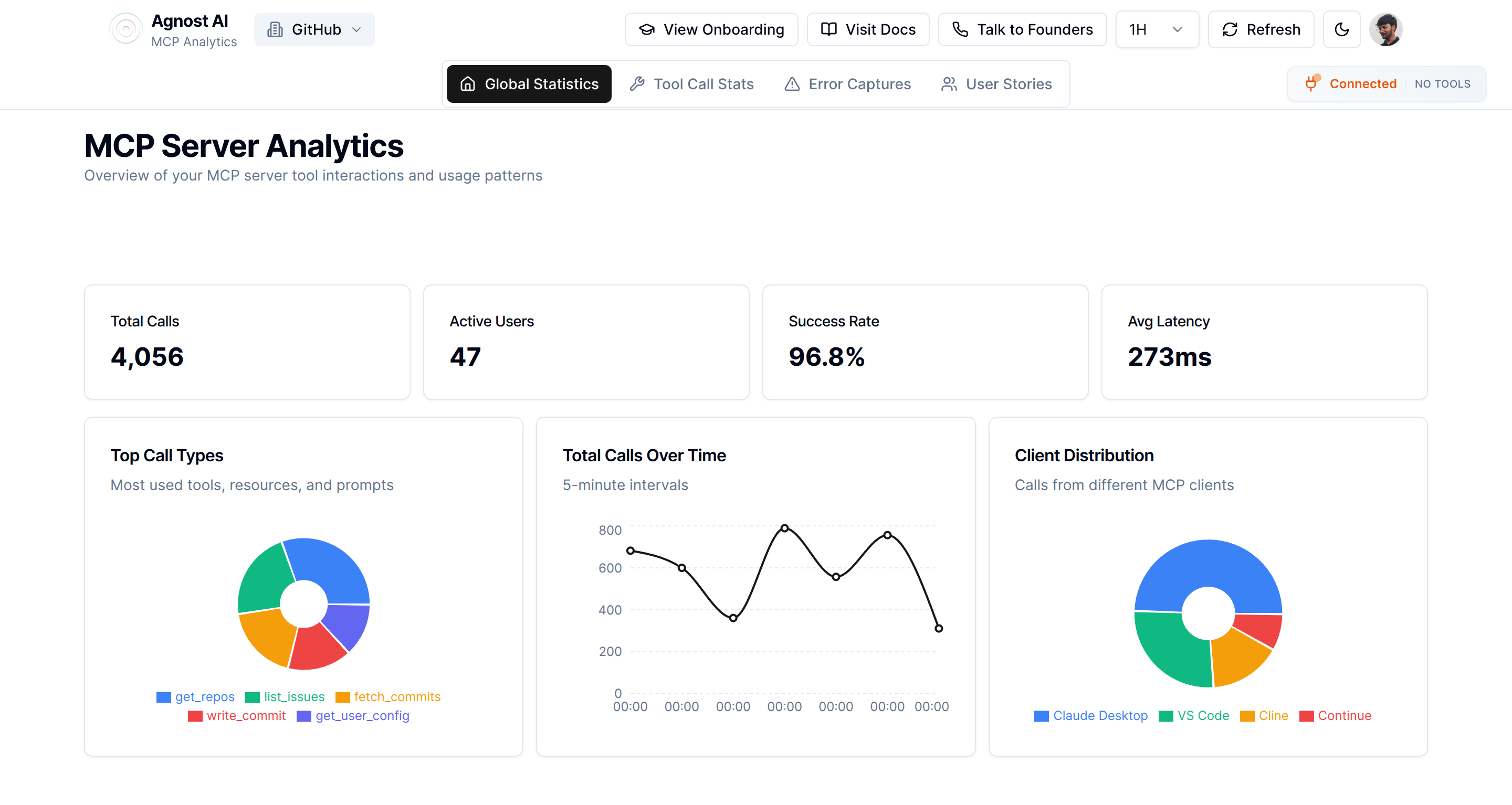

# No ngrok requiredAnd if you want to track how your MCP tools are actually being used in production (which tools get called, response times, error rates) you’ll need proper analytics. That’s where platforms like Agnost AI come in.
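Whatever platform you pick, the raw signals are cheap to capture yourself. Here’s a minimal sketch that records calls, errors and total latency around any async tool handler. It assumes a hypothetical withMetrics wrapper and an in-memory stats map, not Agnost’s or anyone else’s API.

```typescript
// Hypothetical in-memory stats per tool name
interface ToolStats {
  calls: number;
  errors: number;
  totalMs: number;
}

const stats = new Map<string, ToolStats>();

// Wrap an async tool handler so every call records latency and failures
function withMetrics<A extends unknown[], R>(
  name: string,
  handler: (...args: A) => Promise<R>,
): (...args: A) => Promise<R> {
  return async (...args: A): Promise<R> => {
    const s = stats.get(name) ?? { calls: 0, errors: 0, totalMs: 0 };
    stats.set(name, s);
    s.calls += 1;
    const start = performance.now();
    try {
      return await handler(...args);
    } catch (err) {
      s.errors += 1;
      throw err; // still surface the failure to the caller
    } finally {
      s.totalMs += performance.now() - start;
    }
  };
}
```

You’d wrap the handler at registration time, for example by passing withMetrics("show_spotify_playlist", handler) as the last argument to server.registerTool. From there, ship the map to whatever backend you use.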

The Mental Shift Required

Building Apps SDK projects isn’t like building normal apps:

Traditional apps: User downloads, opens, uses

Apps SDK: User asks, LLM invokes, widget renders

You’re not building a standalone app. You’re extending conversations.

Optimize for:

- The AI understanding when to invoke your tool

- Widgets that load fast and render clean

- Conversation-first UX

Don’t optimize for:

- Complex onboarding flows

- Standalone discoverability

- Traditional user acquisition
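On the “AI understanding when to invoke your tool” point, most of that comes down to tool metadata. Here’s a sketch of a config written for the model. The wording is illustrative, a local ToolConfig type stands in for the SDK’s, and inputSchema is left out for brevity. openai/toolInvocation/invoking and openai/toolInvocation/invoked are the Apps SDK’s status strings, shown while the tool runs and after it finishes.

```typescript
// Local stand-in for the config object passed to server.registerTool (subset)
interface ToolConfig {
  title: string;
  description: string;
  _meta: Record<string, string>;
}

const showPlaylistConfig: ToolConfig = {
  title: "Show Spotify Playlist",
  // Say what the tool does AND when to use it, so the model can match intent
  description:
    "Displays a Spotify playlist as an interactive player. " +
    "Use when the user asks to see, open, or play a specific playlist.",
  _meta: {
    "openai/outputTemplate": "ui://widget/spotify-playlist.html",
    // Short status lines ChatGPT shows while the tool runs and after it finishes
    "openai/toolInvocation/invoking": "Loading playlist",
    "openai/toolInvocation/invoked": "Playlist ready",
  },
};
```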

Why This Actually Matters

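You’re building on MCP, an open standard, so you’re not locked into OpenAI’s ecosystem. Your tool layer can work with Claude, Gemini, or any other MCP-compatible client. The UI pieces covered here (openai/outputTemplate, text/html+skybridge, window.openai) are ChatGPT-specific.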

Plus: 800 million weekly active users who are already in the interface. Zero download friction.

Pilot partners like Zillow, Spotify, Canva, Booking.com, Coursera, Figma, and Expedia are already live, with more partners launching later this year. OpenAI also plans to start accepting app submissions for review and publication later in 2025. Monetization options will include support for the new Agentic Commerce Protocol (an open standard that enables instant checkout in ChatGPT).

Get Started

- OpenAI Apps SDK docs - Official reference

- MCP specification - Protocol deep dive

- OpenAI Apps SDK examples repo - Example apps and MCP servers

By Apple’s count, the App Store ecosystem facilitated $1.1 trillion in billings and sales in 2022. The AI app ecosystem? We’re about to find out.

Key Takeaway: The Apps SDK isn’t just another API. It’s the infrastructure layer for AI-native software distribution. Learn it now, or watch from the sidelines.